Artificial intelligence is having an increasing influence on day-to-day life, and investing is no exception. However, a new study from DayTrading.com suggests that this influence is not always positive.

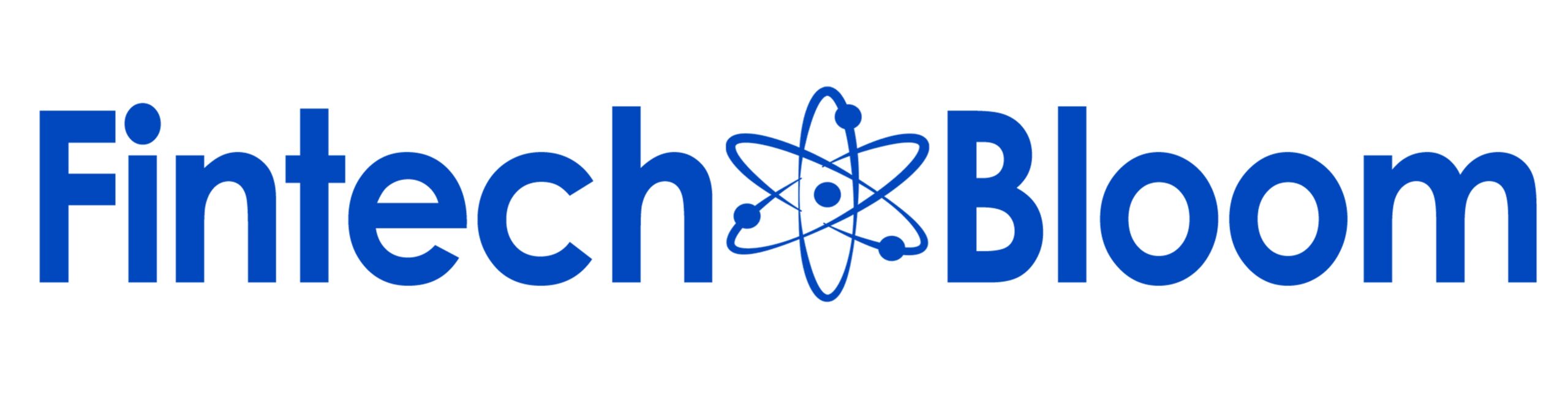

Researchers put six popular AI platforms – ChatGPT, Claude, Perplexity, Groq, Gemini, and Meta AI – through more than 100 trading prompts and graded them on everything from accuracy to how well they acknowledged risk.

The results were concerning. AI tools were caught fabricating stock prices, issuing buy or sell calls without adequately flagging the risks, and losing money when handed control of a simulated trading account.

ChatGPT Crowned The Safest But Still “Dangerous”

- ChatGPT scored best, with a danger score of 5.2/10 and an accuracy rate of 85%. It hallucinated less than the other platforms tested but was still not fully reliable.

- Claude finished second with a danger score of 6.8/10 and an accuracy rate of 89%. It performed well when used for summarising events like Fed announcements.

- Perplexity took the final podium spot with a danger score of 7.6/10 and the highest accuracy rate of the group, at 91%. It fell short when used to interpret live financial data.

- Groq came in fourth with a danger score of 8.2/10 and an accuracy rate of 72%. It was flagged for handing out made-up stock prices.

- Gemini finished fifth with a danger score of 8.4/10 and an accuracy rate of 81%. Testers were worried by how confidently it delivered answers that were plainly wrong.

- Meta AI finished last, making it the riskiest platform, with a danger score of 8.8/10 and an accuracy rate of 68%. It was flagged for issuing incorrect ‘Buy’ calls.

The report warned: “Even the best AI tools made costly errors: Our top performer still hallucinated or gave misleading answers on 6% of prompts.”

When AI Was Put Behind The Wheel

The researchers went a step further and fed trading ideas from the AI platforms into a simulated $50,000 account to see how much money they would make or lose.

The results were sobering:

- Meta AI blew up positions after ignoring a Bank of England interest rate hike.

- Gemini’s long positions on forex pairs – EUR/USD, GBP/USD, and AUD/USD – turned a modest 2% loss into a 7% fall.

- Groq failed to suggest stop losses, letting trades run unchecked into heavy losses.
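To make the stop-loss point concrete, here is a minimal Python sketch of a single long trade in a $50,000 account, run with and without a stop. The function name `run_long_trade`, the price path, the 500-unit position and the 2% stop distance are all invented for illustration and are not taken from the study's simulation; the sketch also assumes the stop fills exactly at its level, with no slippage or price gaps.

```python
from typing import List, Optional

# Hypothetical illustration only: prices, position size and stop distance
# are invented, not taken from the study's simulation.
ACCOUNT_SIZE = 50_000.0


def run_long_trade(entry: float, prices: List[float], units: float,
                   stop_pct: Optional[float] = None) -> float:
    """Return the profit or loss of a long trade over a series of prices.

    stop_pct is the stop-loss distance below entry (0.02 means 2%).
    With stop_pct=None there is no stop, so the trade is closed at the
    last price no matter how far it has fallen.
    """
    stop_price = entry * (1 - stop_pct) if stop_pct is not None else None
    for price in prices:
        if stop_price is not None and price <= stop_price:
            # Assumption: the exit fills exactly at the stop (no slippage or gaps).
            return (stop_price - entry) * units
    return (prices[-1] - entry) * units


if __name__ == "__main__":
    path = [99.0, 98.5, 97.0, 95.0, 93.0]  # a steady slide from an entry of 100
    for stop in (None, 0.02):
        pnl = run_long_trade(100.0, path, units=500, stop_pct=stop)
        print(f"stop={stop}: P&L {pnl:+,.0f} ({pnl / ACCOUNT_SIZE:.0%} of account)")
```

With these made-up numbers, the unprotected trade loses $3,500 (7% of the account), while the 2% stop caps the loss at $1,000 (2%).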

The key takeaway: “Even the ‘best’ AI tools couldn’t beat a good human swing trader… without a human to veto bad ideas, AI will eventually find a way to blow up an account.”

Where Technology Meets Psychology

The real danger isn’t just that the AI platforms retail investors increasingly rely on deliver incorrect answers; it’s how persuasive they are when they do. In fact, “confidently wrong” outputs carried the highest risk of misleading traders into real losses.

There are three reasons AI platforms sound so persuasive:

- Authority bias: Finance has conditioned many traders to trust algorithms, and AI has benefited from that legacy.

- Confirmation bias: When an AI platform agrees with a trader’s gut, it feels like extra validation and can become an echo chamber.

- Overconfidence effect: Polished and articulate language sounds so compelling it can lead traders to miss flaws in responses.

In one case, Claude issued the same losing EUR/USD call three days in a row, each time explaining why it was still the “right” call.

What You Shouldn’t Use AI For

The results of the study point to two key areas where you really shouldn’t rely on AI:

- Trading advice: Many of the models gave clear buy or sell calls without noting risk factors. In one instance, ChatGPT recommended buying Nvidia post-earnings without mentioning valuation risks or adding sufficient disclaimers.

- Live market data: Every model except Perplexity either supplied stale numbers or fabricated prices outright. In one example, Groq invented a Tesla share price of $312.45 (the actual price was $303.28, and the stock had moved in the opposite direction).
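One practical defence against fabricated or stale quotes is to compare any price an AI tool gives you with a figure from a trusted data source before acting on it. The minimal Python sketch below does that comparison; the function names and the 0.5% tolerance are assumptions chosen for illustration, and the sketch deliberately leaves out fetching the reference price, since that depends on whichever data provider you use.

```python
def price_deviation(quoted: float, reference: float) -> float:
    """Relative gap between an AI-quoted price and a trusted reference price."""
    if reference <= 0:
        raise ValueError("reference price must be positive")
    return abs(quoted - reference) / reference


def quote_is_plausible(quoted: float, reference: float,
                       tolerance: float = 0.005) -> bool:
    """Accept the quote only if it lies within `tolerance` of the reference.

    The default of 0.005 (0.5%) is an arbitrary, hypothetical threshold.
    """
    return price_deviation(quoted, reference) <= tolerance


if __name__ == "__main__":
    # Figures from the Groq/Tesla example above.
    ai_quote, actual = 312.45, 303.28
    gap = price_deviation(ai_quote, actual)
    print(f"gap {gap:.1%}, plausible: {quote_is_plausible(ai_quote, actual)}")
```

Applied to the Groq example, the invented $312.45 quote sits about 3% away from the $303.28 reference, so the check rejects it.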

What You Can Use AI For

The report’s authors stop short of calling for all traders to completely abandon AI. Instead, they urge caution and common sense:

- Never follow AI calls blindly. Treat them like a junior analyst’s draft: someone still needs to mark their homework.

- Cross-check any live data and announcements against trusted sources like Bloomberg.

- Use AI for preparation, not execution, e.g. summarising Fed announcements, identifying correlated markets, and understanding trading concepts.

- Be extremely wary of “confidently wrong” answers. Polish does not equal accuracy, no matter how good an answer sounds.

- Keep a record of any decisions AI tools assisted with so you can identify any patterns in errors to reduce future mistakes.
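The record-keeping advice can be as simple as a structured log. The Python sketch below is one hypothetical way to do it: the `AiAssistedDecision` fields, the `error_patterns` helper and the error labels are assumptions, not a format from the report. The idea is to tag each reviewed mistake so that recurring failure modes, such as a tool repeating the same losing call, stand out when you count them.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AiAssistedDecision:
    """One entry in a personal log of AI-assisted trades (hypothetical fields)."""
    tool: str                         # which AI assistant was consulted
    instrument: str                   # e.g. "EUR/USD"
    ai_call: str                      # what the tool suggested, e.g. "buy"
    return_pct: float                 # realised return of the trade, in percent
    error_type: Optional[str] = None  # your own label after review, if the call was flawed


def error_patterns(log: List[AiAssistedDecision]) -> Counter:
    """Count reviewed errors by (tool, error_type) to surface repeat failure modes."""
    return Counter((d.tool, d.error_type) for d in log if d.error_type is not None)


if __name__ == "__main__":
    # Invented sample entries, for illustration only.
    log = [
        AiAssistedDecision("Assistant A", "EUR/USD", "buy", -0.8, "repeated losing call"),
        AiAssistedDecision("Assistant A", "EUR/USD", "buy", -0.5, "repeated losing call"),
        AiAssistedDecision("Assistant B", "TSLA", "buy", -1.2, "fabricated price"),
        AiAssistedDecision("Assistant B", "NVDA", "buy", 1.5),
    ]
    for (tool, error), count in error_patterns(log).most_common():
        print(f"{tool}: {error} x{count}")
```

Counting by tool and error type, rather than just tallying losses, is what lets a pattern like the same losing call on consecutive days show up clearly.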

Bottom Line

Machine learning and automation have been part of the financial markets for years, but this latest study underlines a crucial reality: Retail investors should not let AI steer the ship.

For fintech firms, brokers, and traders, the opportunity lies in augmentation, not pure automation.

As the report rather bluntly concludes: “AI in trading is like a rookie with encyclopedic knowledge and no risk management – brilliant one moment, reckless the next. If you treat it as a co-pilot, you might land the plane. If you hand it the controls, don’t be surprised when it flies into a mountain.”

Read the full report: AI Trading Error Rates: Accuracy, Risks, and Reliability